AI is reshaping the hiring industry, and not always in ways recruiters are prepared for.

At SocialTalent Live: Tackling AI-Driven Candidate Cheating, industry leaders from Accenture, Splunk, and Britton & Time joined us to discuss how AI-generated applications are affecting hiring, what new compliance laws mean for recruiters, and – most importantly – how talent teams can stay ahead of the curve.

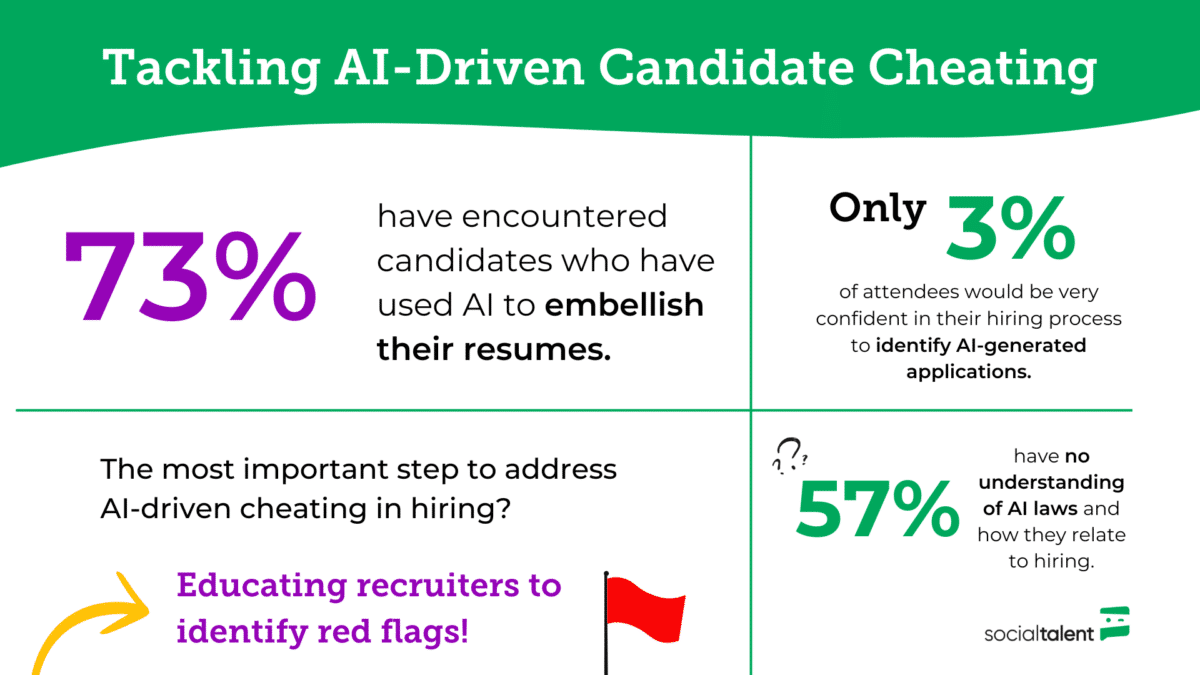

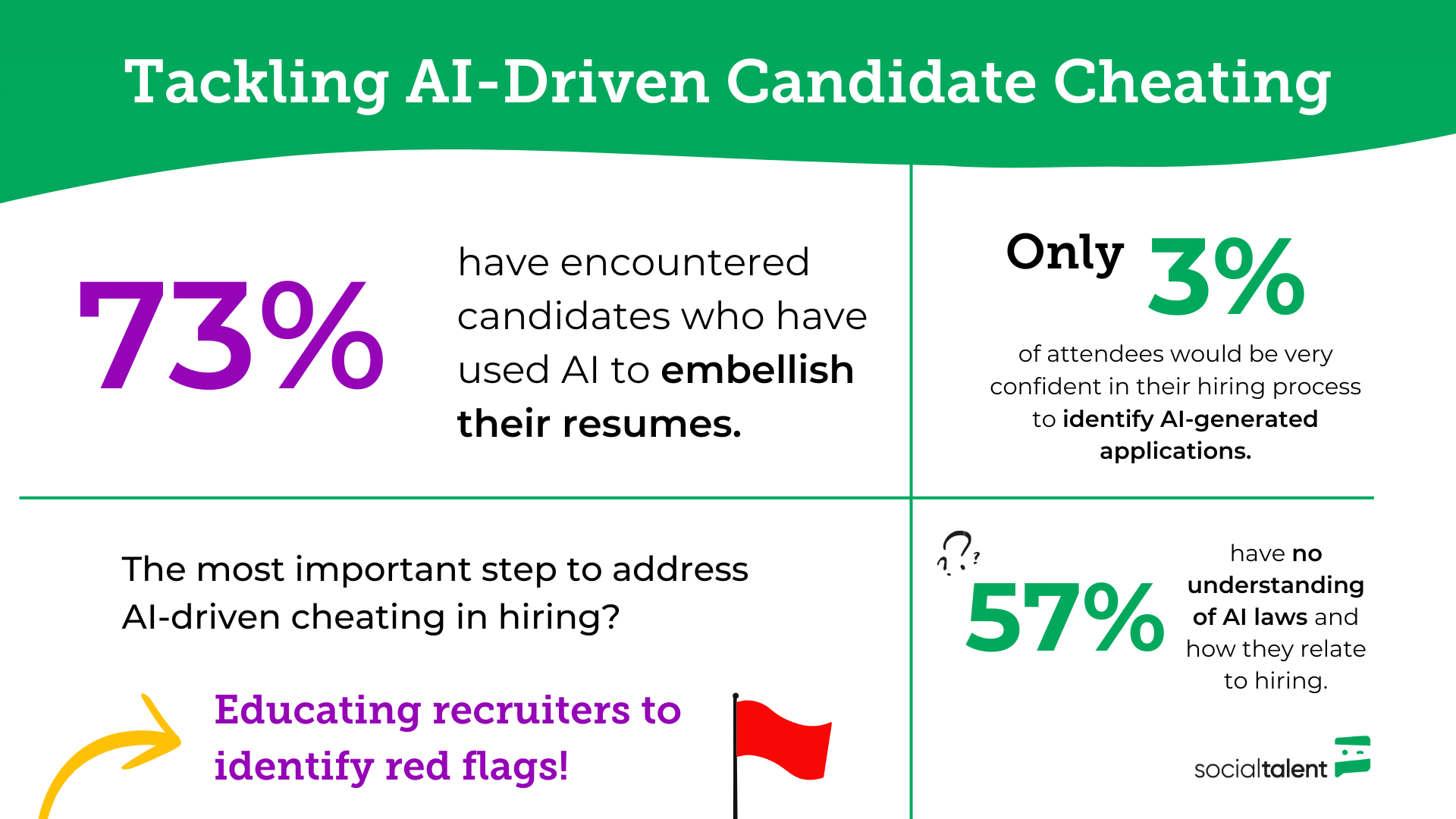

To gauge the audience’s perspective, we ran live polls throughout the event. The results were eye-opening:

- 73% of recruiters have encountered AI-enhanced resumes.

- Only 3% feel very confident in identifying AI-generated applications.

- 57% have no understanding of AI laws and how they relate to hiring.

- The most important step to tackling AI-driven cheating? Educating recruiters to identify red flags.

With AI tools now capable of refining resumes, fabricating experience, and even coaching candidates in real-time during interviews, talent acquisition teams are facing a new and urgent challenge.

So how can they respond?

1. AI-Embellished Resumes Are the New Normal

Key Poll Insight: 73% of recruiters have encountered AI-enhanced resumes.

AI-powered job application tools are making it easier than ever for candidates to tailor their resumes, optimize keyword matching, and even inflate their experience. While some use AI responsibly – to refine language and improve clarity, for example – others take it further, exaggerating skills and crafting misleading career narratives.

Tom Sayer, Global Recruiting Operations Lead at Accenture, shared just how widespread this issue is. His team has seen a 30% increase in applications, partly driven by AI use. When surveyed, 29% of Accenture’s recruiters reported that they frequently encounter AI-enhanced CVs. That is in line with external reports suggesting that AI-generated applications could now account for up to 50% of total job submissions.

“Some of this is leading to a sea of sameness. It’s very hard to distinguish between experience when CVs look the same, sound the same, and candidates struggle to demonstrate those skills in real conversations.”

For recruiters, the challenge is clear: How do you differentiate between AI-enhanced applications and real, verifiable skills?

How Companies Are Responding

Organizations are tackling this issue by:

- Moving toward skills-based hiring: Placing greater emphasis on assessments and practical demonstrations of ability rather than just CVs.

- Training recruiters to recognize AI patterns: Such as overly polished, generic language, or resumes that are keyword-heavy but lack substance.

- Introducing proctored assessments: To ensure candidates can actually demonstrate the skills they claim to have.

Learn more: The AI Dilemma Flooding Talent Pipelines

2. Recruiters Are Struggling to Keep Up

Key Poll Insight: Only 3% of recruiters feel very confident in spotting AI-generated applications.

Despite AI-enhanced applications becoming more common, most recruiters aren’t confident in their ability to detect them.

Why? Because AI is evolving faster than traditional hiring methods. The industry is at an inflection point right now. Many teams still rely on outdated hiring practices, while AI-generated content is becoming more sophisticated and harder to detect.

“We need to think about what the acceptable use [of AI] is versus what is cheating,” Tom told us. Tools, processes, and policies are all important to help the recruiter, but we must also help the candidate navigate this. “I think we need to be out there very clearly, on our career sites, within communications, talking to them about what we deem to be acceptable use.”

How Companies Can Adapt

Rather than trying to “catch” AI-enhanced applications, recruiters should focus on identifying real skill and experience. Best practices include:

- Probing deeper in interviews: Particularly if responses feel generic or overly structured, push for specific real-world examples and practical applications of knowledge.

- Structuring assessments to validate skills: Placing a heavier focus on job simulations, live problem-solving, and case studies.

- Encouraging transparency: Guiding candidates on acceptable AI use rather than reacting defensively.

Learn more: Tackling AI-Driven Candidate Cheating

3. AI Laws Are a Blind Spot for Most Companies

Key Poll Insight: 57% of recruiters have no understanding of AI laws and how they impact hiring.

The legal landscape around AI in hiring is rapidly evolving, but most recruiters aren’t aware of their responsibilities.

The EU AI Act, which came into force in August 2024, classifies AI-driven hiring as high-risk and requires organizations to ensure:

- Transparency – candidates must be explicitly informed if AI is used in the hiring process.

- Explainability – employers must be able to clearly articulate how AI-driven decisions are made.

- Traceability – AI systems must provide an audit trail showing their decision-making logic.

- Human Oversight – AI cannot fully automate hiring decisions; a human must always be involved.

- Candidate Consent – applicants must actively opt in to AI-driven processes, ideally through multiple consent steps to ensure compliance.

“The EU AI Act has global reach,” Paul Britton explained. “And you don’t want to be caught out by this. The penalties can be quite steep. They’re up to €27 million, or 7% of your global turnover, in the most punitive circumstances for non-compliance.”

What Companies Should Do

- Audit AI-powered hiring tools to ensure compliance.

- Require vendors to be transparent about how AI models make decisions.

- Train hiring teams on AI-related legal risks to avoid future penalties.
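
One way to make the audit step concrete is to track each AI-powered hiring tool against the five requirements listed above. The snippet below is a minimal Python sketch of such a checklist, not a legal compliance test: the `HiringToolAudit` class, the requirement keys, and the `resume-screener` tool name are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass

# Hypothetical keys, one per requirement named in this section.
REQUIREMENTS = (
    "transparency",
    "explainability",
    "traceability",
    "human_oversight",
    "candidate_consent",
)


@dataclass
class HiringToolAudit:
    """Illustrative checklist for a single AI-powered hiring tool."""

    tool_name: str
    checks: dict[str, bool]

    def gaps(self) -> list[str]:
        # A requirement that is missing from `checks` counts as unmet.
        return [r for r in REQUIREMENTS if not self.checks.get(r, False)]

    def passes(self) -> bool:
        return not self.gaps()


audit = HiringToolAudit(
    tool_name="resume-screener",  # hypothetical tool
    checks={"transparency": True, "explainability": True, "human_oversight": True},
)
print(audit.gaps())  # ['traceability', 'candidate_consent']
```

Treating an unanswered requirement as a gap is a deliberate choice here: it pushes teams to confirm each point with the vendor rather than assume it is covered.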

4. The Best Solution? Recruiter Education

Key Poll Insight: The most important step to addressing AI-driven cheating? Educating recruiters to identify red flags.

AI isn’t going away. And the best way forward is to train recruiters to recognize AI use, probe deeper, and focus on skill validation.

When your recruiters have a solid foundation of knowledge about what good looks like from a candidate, even the best AI will fail to match this. Strong processes, inquisitive questioning, and due diligence have a way of finding the best talent.

“We’re not trying to have interviewers be vigilantes or experts in detecting AI. We are trying to have them be experts in detecting skill validation.” – Allie Wehling, Splunk.

Splunk itself has taken a proactive approach, ensuring that:

- Recruiters and hiring managers are educated on AI use and trained to guide candidates on acceptable AI practices.

- Candidates receive clear guidance upfront on what AI use is allowed (e.g., grammar/spell-checking) and what crosses the line (e.g., fabricating experience).

This transparency-first approach builds trust and fairness in the hiring process: candidates aren’t penalized for responsible AI use, and recruiters feel empowered to seek out the best talent.

Learn more: Discover how SocialTalent can help upskill your recruiting and hiring teams

Final Thoughts: Adapt or Fall Behind

AI-driven candidate cheating isn’t a passing trend; it’s a growing reality of hiring. But companies that embrace AI responsibly will have a competitive edge.

So, remember:

- Recruiters need training – focus on identifying real-world ability.

- Transparency wins – clear expectations lead to fairer hiring.

The future of hiring belongs to teams that evolve with AI, not against it.

Don’t forget to join us for our next SocialTalent Live event, where we’ll be discussing Hiring Maturity Models. Sign up now!

The post AI, Cheating, and Hiring: How Talent Teams Can Stay Ahead appeared first on SocialTalent.