Generative AI is showing up in HR applications in a big way, and interest in the tools is strong. I’ve identified exactly 100 AI use cases in HR, and I’m confident I could add many more to that list. But are HR departments taking the time to really understand these new capabilities and, more importantly, how people outside the company will use them? My recent interactions throughout the industry suggest that HR leaders need to pause their purchasing enthusiasm for a bit and do some soul-searching and research first.

HR teams will need:

- new HR policies regarding the planned (or banned) use of generative AI;

- a deeper understanding of newer AI tools and the specific risks, security issues and other challenges they could present;

- new workflows for affected HR activities; and

- new countermeasures based on how job seekers, employees and others might use generative AI tools.

Let’s look at each of these areas.

Should HR object to AI-assisted resumes?

I recently spoke to an audience of about 700 HR professionals on the intersection of AI and HR. Things got interesting when I asked some pointed questions like:

- Would you decline to move a candidate forward in the recruiting process if their resume or cover letter was materially influenced by a generative AI tool?

- How would HR professionals react if they suspected a candidate had used generative AI to predict likely interview questions and to suggest answers to them?

- Would your HR team acquire one of the new tools that detect AI-generated content in a job seeker’s resume or application?

Initially, the audience indicated that they would consider an applicant whose resume had gotten some help from a generative AI tool. That sentiment, I noticed, began to change once we discussed the matter more thoroughly.

The same HR executives also thought that HR might want to acquire tools to look for AI-generated content in job applications, resumes, cover letters and thank-you notes. That sentiment also changed as the discussion went on.

Here are some of the issues raised:

- Tools that identify AI-generated verbiage may themselves be AI tools, subject to the same shortcomings found in other AI tools (e.g., hallucinations, inappropriate language and unsuitable responses). Using these AI detection tools might expose the employer to litigation if a job seeker’s application were flagged as AI-assisted when it was not. To that point, an AI tool that looks for plagiarism recently flagged a university student’s paper as plagiarized. The student claims to have used a grammar-checking tool, not an AI content-creation tool.

- A job applicant who uses AI-generated copy for resumes is simply gaming talent acquisition software. Job seekers have been trying to outfox ATS (applicant tracking system) technology for decades with low-tech tricks (e.g., keyword stuffing in a resume) and are now getting more sophisticated and powerful tools. Job seekers are definitely using generative AI tools to do keyword stuffing better, faster and more efficiently.

- Some job seekers may feel quite justified in using these tools: they provide a competitive advantage, and employers have not cautioned candidates to avoid them.

- Some might find it ironic that HR departments using AI tools for many functions (including AI-assisted job description generators) would want to prohibit job seekers from using similar tools. Isn’t what’s good for the goose also good for the gander?

- AI-enhanced resumes may relegate non-enhanced resumes to the reject pile. In other words, great candidates could be eliminated from consideration while more marginal candidates, with resumes designed to delight an ATS, move ahead in the process.


When an employer casts a wide net for applicants, resumes that have been keyword-stuffed and tarted up by generative AI will share a sameness that doesn’t reflect real differences between candidates. Employers that don’t examine what is happening (or starting to happen) to their recruiting practices and results are in for a shock. In recruiting, the biggest casualties may be that:

- scores of resumes will appear to be the same;

- scores of candidates will appear to be great candidates, and it will be difficult to differentiate between them;

- “authentic” job seekers and their paperwork will never be seen, as job seekers who use AI will advance in ATS scoring while those who don’t will get no notice; and

- the actual quality of applicants being considered for in-person interviews may decline.

It saddened me to learn that some companies are so focused on filling job vacancies now that they don’t care whether resumes are AI-enhanced. Ugh! This “the ends justify the means” kind of thinking is both unappetizing and depressing. If your firm isn’t winning its war for talent, taking generative AI shortcuts may not be the best business decision.

There are two big issues at play here. First, how can a firm know who the authentic job seeker is if it receives super-sanitized, enhanced materials from the candidate? How large a gap between the paperwork and the person is acceptable, and will the recruiting process need more tests or personal interviews to establish the recruit’s true abilities? Second, job seekers may currently be savvier about generative AI than recruiting or HR teams are.

Recently, USA Today ran a story about a job seeker and noted that she:

“cut and pasted the job description and my resume into ChatGPT and had AI create a list of interview questions … [the job seeker] used ChatGPT to help with an early first draft of a cover letter and to critique her resume based on specific job descriptions. ‘These needed heavy editing, obviously,’ she added, ‘but they used all of the keywords that AI would look for on the receiving end.’”

Playing devil’s advocate, I asked: If an applicant’s resume is much more compelling than their actual skills, is HR incurring additional costs (e.g., more testing and interviewing of candidates) to avoid hiring less-qualified people? There’s also the opportunity cost of HR spending time and money interviewing AI-enhanced but still less-qualified candidates. What does it cost your firm when a great candidate gets away because less-qualified candidates tied up recruiters’ time? I followed that with another question: Does your talent acquisition team ever read the resumes of people your ATS screens out to see whether it is actually advancing the right people? One HR person bluntly responded, “We just don’t have time to do that.”

What I took away from these discussions is that HR professionals are approaching AI in a trusting and naïve manner that could be problematic. They also seem to be looking at AI from one perspective (i.e., their own selfish perspective) and failing to understand how other constituents might view this technology or the consequences it could generate. Having a blind spot toward generative AI can create major problems for unprepared HR teams.

These HR leaders also disagreed about whether AI use is acceptable, whether by HR or by job seekers. That’s fascinating, as it suggests how little HR professionals are talking about AI with each other. HR leaders will need to get up to speed on AI quickly, clarify what the acceptable uses of the technology are, understand all related risks (e.g., litigation, data privacy and security) and create relevant policies that all employees will follow. Judging from my recent interactions, that may not be happening yet.


An emerging arms race

What no one is saying explicitly is that we’re seeing an arms race in recruiting technology, and HR groups need both effective countermeasures and policies to deal with these rapidly escalating challenges. How bad is it? One job seeker used an AI tool not only to perfect his resume but also to pre-fill applications, which he submitted to 5,000 firms in one week! Job seekers will swamp talent acquisition teams with tarted-up resumes for jobs they may have little interest in. This amounts to job application spamming, and it does nothing to help employers. Does your recruiting team have a countermeasure for this?

Militaries often maintain teams that monitor the new weapons being deployed by other militaries. They assess how well their current methods and weaponry will perform against these new threats. Where they detect weaknesses, they identify countermeasures (i.e., changes in their tactics and weapons).

HR organizations need to monitor competitors, technologies and job seekers to see what new tactics and tools are now possible and what countermeasures are needed in their own firm’s toolset. Generative AI is clearly triggering a need for new countermeasures, as job seekers may be better equipped than HR teams and more aware of the opportunities that new AI tools can offer. HR can’t afford to fall behind in this arms race.

Ask the right questions

I recently asked an executive at a major software vendor what kinds of questions prospective buyers were asking his firm about AI. He said they usually ask only how to keep their internal data out of public AI models, and not much else. Yet AI is materially altering the world of HR, and buyers may not be savvy enough to know what they really should acquire, what controls they’ll need and what AI-powered HR technology they should run away from.

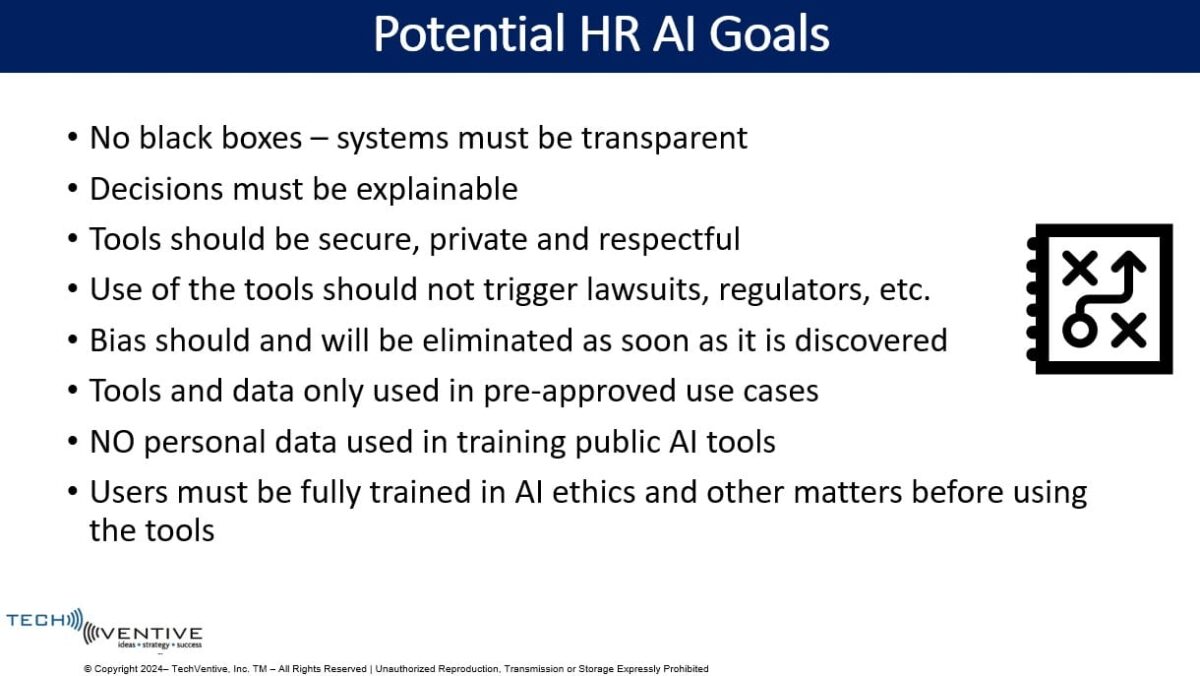

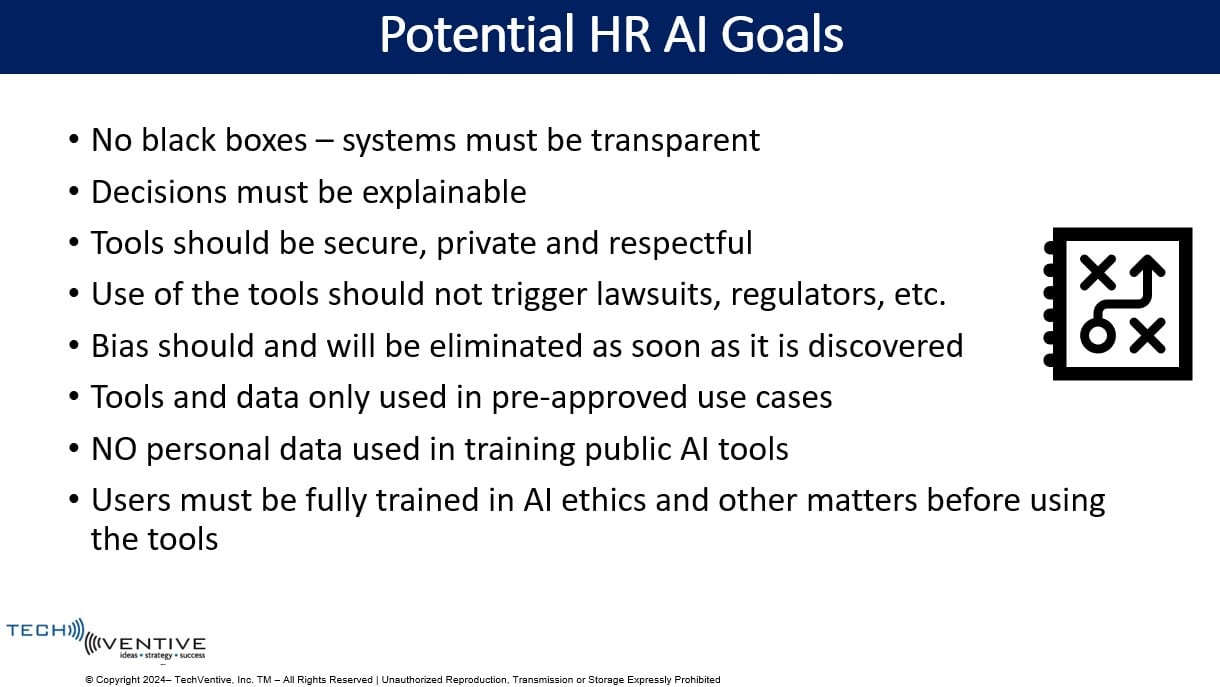

What we’re seeing is that HR leaders’ interest in and adoption of AI may be running ahead of the policies, controls and guidelines they need. So, before HR teams start entertaining new software purchases, they may want to define their AI guiding principles first.

New policies

Generative AI should trigger discussions within every HR team. Those discussions will likely cover assessments of new AI-powered technologies and how employees, alumni, contractors, job seekers, governments and other constituents will use them. Those discussions will also illuminate whether existing HR processes and policies need to be adjusted.

The key output of these policy and process assessments could be new or updated guiding principles for HR’s use of generative AI tools.

This isn’t optional, and I believe many HR teams will struggle to get full agreement on some matters. For example, what does recruiting do if some recruiters believe it is acceptable for job seekers to use generative AI to create large portions of resumes, cover letters, thank-you letters and interview content while other recruiters strongly disagree? Some policies will generate new work (or eliminate some work steps altogether) for HR. Process changes are likely, and even the economics of the HR function might warrant review.

Guiding principles might help in evaluating new HRMS software, needed controls, policy changes, process steps and more. And these guiding principles might help prevent HR team members from doing something that exposes the company to unwanted risk and/or litigation.

Get the results you need and want

Some of the new AI tools are relatively trouble-free (e.g., benefits Q&A chatbots), while those that try to ascertain the truthfulness of a job interviewee’s responses have their challenges (and may trigger lawsuits). Not every AI-powered HR tool will be a great tool or offer great value to your firm. Each tool has its own risks and rewards, and HR teams need to look at each with a discerning eye.

HR teams need to assess whether each tool will deliver the results their organization desires. More specifically, HR should not simply acquire new AI tools without thinking through how each will deliver competitive advantage, not just competitive parity. This effort requires HR leaders to become very cosmopolitan, that is, keenly aware of their environment and not just of their own firm. This worldly view should offer insights into how others will use (or abuse) these new technologies and what risks or opportunities your firm might face.

HR must get clear on the boundaries of the AI tools it will use and define the bright lines HR won’t cross. It’s time for HR to get ready for the generative AI world and to do so wisely.

The post Is HR really ready for generative AI? Probably not appeared first on HR Executive.